This blog was written by Akshat Gupta, an Agora Developer Evangelist Intern who started his journey at Agora as a Superstar. Akshat specialises in frontend web technologies and has written several blogs and developed multiple projects that help developers understand how to integrate the Agora Web SDKs into their applications.

Learn how to make a real-time translation service using the Agora Web SDK and Google Cloud.

Introduction

Doing business globally is a goal for almost every company. The chance to scale up to an international level can increase profits but may require knowledge of multiple languages to communicate with clients or partners from around the world.

Bringing an interpreter into multilingual video conferences is often impractical: it can be disruptive, and relaying every exchange makes meetings longer than necessary. An interpreter also means sharing information you may want to keep confidential with a third party.

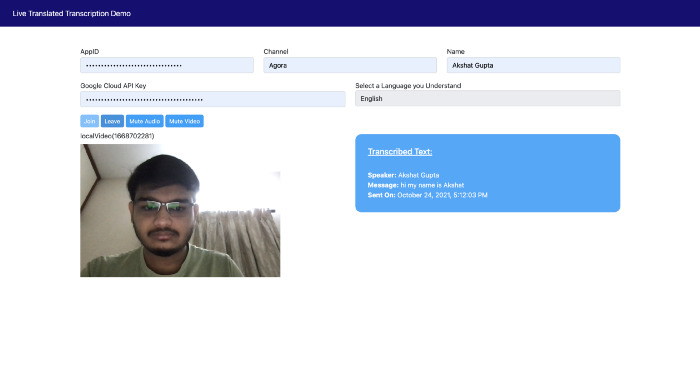

In this tutorial, we will develop a web application that supports speech-to-text transcription and translation using the browser's Web Speech API, the Agora Web SDK, the Agora RTM SDK, and the Google Cloud Translation API, removing the language barrier during video calls without depending on interpreters.

Prerequisites

- Basic knowledge of JavaScript, jQuery, Bootstrap, and Font Awesome

- An Agora developer account (sign up in the Agora Console)

- Familiarity with the Agora Web SDK and the Agora RTM SDK

- A Google Cloud account

- An understanding of how to send requests to and handle responses from REST APIs

Project Setup

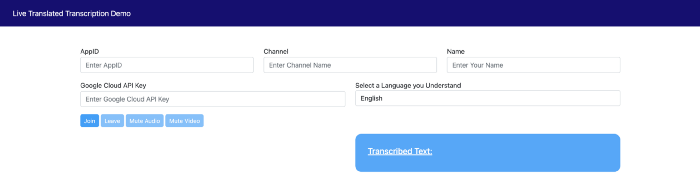

We will build on an existing project, Building Your Own Transcription Service Within a Video Call Web App. Begin by cloning that project's GitHub repository, which gives you a project that looks like this:

Next, remove the HTML for the self-notes feature and the extra buttons. If you have trouble following what the above code does, see this tutorial.

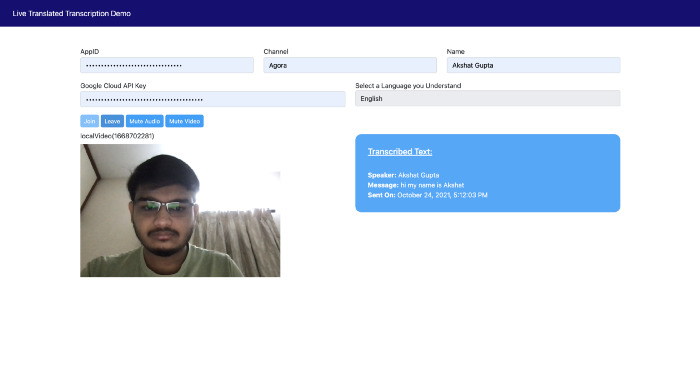

I have also added code for muting and unmuting audio and video to the video calling application; the Agora documentation covers muting and unmuting in more detail. Your code will now look like this.
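The muting code itself is not reproduced in this post. As a rough sketch, assuming the project uses the Agora Web SDK 4.x (NG), where each local track exposes `setEnabled(enabled)` returning a Promise, a mute button only needs to flip the track's state. `toggleTrack` and the `state` object are hypothetical names used for illustration:

```javascript
// Minimal sketch, assuming Agora Web SDK 4.x local tracks, where
// track.setEnabled(enabled) returns a Promise.
// toggleTrack is a hypothetical helper, not part of the Agora SDK.
async function toggleTrack(track, state) {
  const nextEnabled = !state.enabled;
  // Only record the new state once the SDK call succeeds;
  // if setEnabled rejects, state.enabled keeps its previous value.
  await track.setEnabled(nextEnabled);
  state.enabled = nextEnabled;
  return nextEnabled; // true = unmuted, false = muted
}
```

You might wire it to a button with jQuery, for example `$("#mic-btn").on("click", () => toggleTrack(localAudioTrack, micState))`, where `#mic-btn`, `localAudioTrack`, and `micState` are placeholders for your own names. On the older 3.x SDK, the equivalent calls are `stream.muteAudio()`/`stream.unmuteAudio()` and `stream.muteVideo()`/`stream.unmuteVideo()`.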

You now have a fully functional transcription service along with muting and unmuting capabilities.

Adding Real-Time Translation to Our Application

Next, add the following code to the HTML file, below the existing input field row, to give users a field for entering their Google Cloud project's API key.
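The HTML for this row is not reproduced here. On the JavaScript side, a minimal sketch of reading the values from it could look like the following. The element ids `#gcp-key` and `#transcription-lang`, the `en-US` fallback, and the helper name are all hypothetical, and the DOM lookup is passed in as a function so the logic does not depend on jQuery:

```javascript
// Hypothetical helper: reads the API key and transcription language from the form.
// getValue(selector) abstracts the DOM lookup, e.g. (s) => $(s).val() with jQuery.
function readTranslationSettings(getValue) {
  const apiKey = (getValue("#gcp-key") || "").trim();
  // Fall back to US English if no language was chosen (an assumption, not from the original).
  const lang = (getValue("#transcription-lang") || "").trim() || "en-US";
  if (!apiKey) {
    throw new Error("Please enter your Google Cloud API key before joining the call.");
  }
  return { apiKey, lang };
}
```

In the page, you could call it as `readTranslationSettings((selector) => $(selector).val())` when the user joins the call, and show the error message to the user if it throws.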

Create a Google Cloud Translation API Key

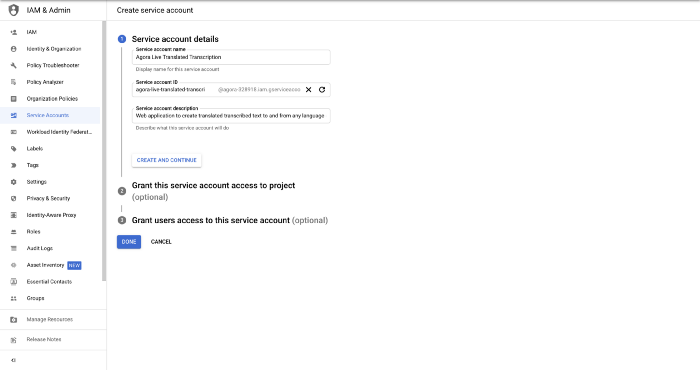

1. In the Cloud Console, go to the Create service account page.

2. Select a project.

3. In the Service account name field, enter a name. The Cloud Console completes the Service account ID field based on this name.

4. In the Service account description field, enter a description. For example, Agora Live Translated Transcription.

5. Click Create and Continue.

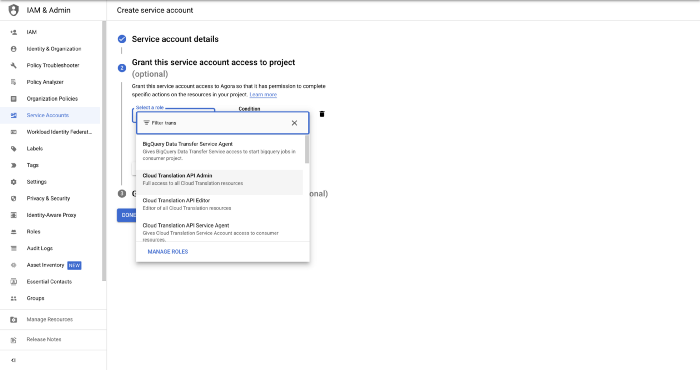

6. Click the Select a role field and choose the Cloud Translation API Admin role.

7. Click Continue.

8. Click Done to finish creating the service account.

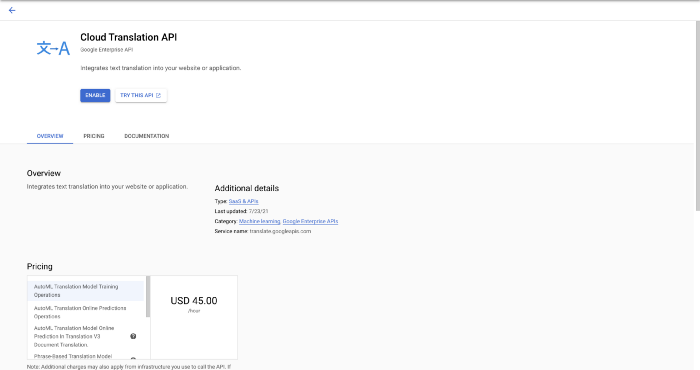

9. Enable the Cloud Translation API for your project from the API Library.

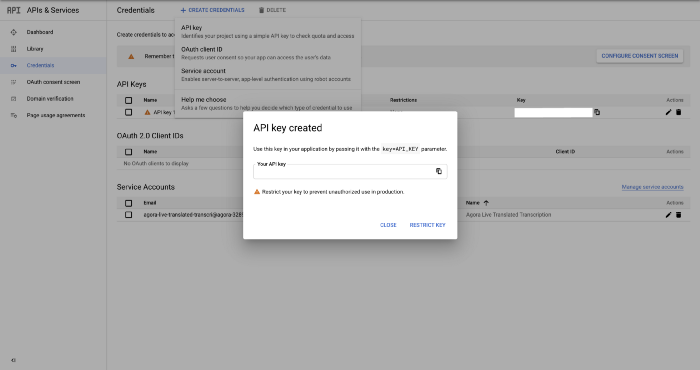

10. Click Credentials in the left sidebar, click Create Credentials, and choose API key.

11. Copy the generated API key.
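Before wiring the key into the app, it can help to confirm that it works. The sketch below is an optional check, not part of the original project: it calls the Cloud Translation API (Basic, v2) `languages` endpoint with the key, and a successful response lists the supported languages. `listSupportedLanguages` is a hypothetical helper, and `fetchImpl` defaults to the global `fetch` available in browsers and Node 18+:

```javascript
// Optional check: list the languages the Cloud Translation API (v2/Basic) supports,
// which only succeeds if the API key is valid and the API is enabled.
const TRANSLATE_V2 = "https://translation.googleapis.com/language/translate/v2";

async function listSupportedLanguages(apiKey, target = "en", fetchImpl = fetch) {
  const url =
    `${TRANSLATE_V2}/languages?key=${encodeURIComponent(apiKey)}` +
    `&target=${encodeURIComponent(target)}`; // target = language for the returned names
  const res = await fetchImpl(url);
  const body = await res.json();
  if (!res.ok) {
    // Error responses have the shape { error: { code, message, ... } }
    throw new Error(body.error ? body.error.message : `HTTP ${res.status}`);
  }
  return body.data.languages; // e.g. [{ language: "fr", name: "French" }, ...]
}
```

Because the key is sent from the browser, treat it as visible to anyone using the page, and consider restricting it to the Cloud Translation API under API restrictions on the Credentials page.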

Core Functionality (JS)

Now that the basic structure is in place and the API key is generated, we can add the translation functionality. It may look intimidating at first, but the Cloud Translation API is straightforward to use if you follow GCP's official documentation.

The code below takes the GCP key the user entered and the user's preferred transcription language. As soon as the user stops speaking, their words are transcribed in the chosen language using the browser's Web Speech API.
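The post's own code is not reproduced here, but the Web Speech API part can be sketched as follows. It assumes a browser that exposes `SpeechRecognition` (Chrome currently uses the prefixed `webkitSpeechRecognition`); the constructor is passed in so the logic can be exercised outside a browser, and `startTranscription` is a hypothetical name:

```javascript
// Minimal sketch of continuous transcription with the Web Speech API.
// SpeechRecognitionCtor is injected; in the browser pass
// window.SpeechRecognition || window.webkitSpeechRecognition.
function startTranscription(SpeechRecognitionCtor, lang, onFinalTranscript) {
  const recognition = new SpeechRecognitionCtor();
  recognition.lang = lang;            // BCP 47 tag, e.g. "en-US" or "hi-IN"
  recognition.continuous = true;      // keep listening across pauses
  recognition.interimResults = false; // only report finished utterances

  recognition.onresult = (event) => {
    // event.results accumulates over the session; start at resultIndex
    // so each utterance is handled only once.
    for (let i = event.resultIndex; i < event.results.length; i++) {
      const result = event.results[i];
      if (result.isFinal) {
        onFinalTranscript(result[0].transcript.trim()); // best alternative
      }
    }
  };

  recognition.start();
  return recognition;
}
```

In the page you would call `startTranscription(window.SpeechRecognition || window.webkitSpeechRecognition, settings.lang, onFinal)`. Some browsers end continuous recognition after a period of silence, so you may also need an `onend` handler that calls `recognition.start()` again while the user is still in the call.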

The transcript is then sent, in the speaker's language, to everyone in the channel through the Agora RTM SDK. When a user receives the message, the app looks up that user's preferred language and calls the Google Cloud Translation API to translate the original text into it. Each participant therefore reads every transcript in their own language, regardless of which language the speaker used.
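A sketch of this send, receive, and translate flow follows. It assumes the Agora RTM 1.x channel API (`channel.sendMessage({ text })` and the `ChannelMessage` event) and the Cloud Translation API Basic (v2) REST endpoint called with the API key. The JSON envelope carrying the speaker's language, and all helper names, are hypothetical choices for illustration, not necessarily what the original project sends:

```javascript
// Sketch assuming an Agora RTM 1.x RtmChannel and the Translation API v2 REST endpoint.
const TRANSLATE_URL = "https://translation.googleapis.com/language/translate/v2";

// Web Speech tags look like "hi-IN"; Translation v2 mostly expects "hi".
// (Exceptions such as "zh-CN"/"zh-TW" would need explicit handling.)
const toTranslateCode = (bcp47) => bcp47.split("-")[0].toLowerCase();

async function translateText(text, source, target, apiKey, fetchImpl = fetch) {
  if (source === target) return text; // same language: skip the API call
  const res = await fetchImpl(`${TRANSLATE_URL}?key=${encodeURIComponent(apiKey)}`, {
    method: "POST",
    headers: { "Content-Type": "application/json" },
    body: JSON.stringify({ q: text, source, target, format: "text" }),
  });
  const body = await res.json();
  if (!res.ok) throw new Error(body.error ? body.error.message : `HTTP ${res.status}`);
  return body.data.translations[0].translatedText;
}

// Sender: broadcast the transcript in the speaker's own language (hypothetical envelope).
function sendTranscript(channel, text, speakerLang) {
  const envelope = JSON.stringify({ text, lang: toTranslateCode(speakerLang) });
  return channel.sendMessage({ text: envelope });
}

// Receiver: translate into this user's preferred language before displaying it.
function listenForTranscripts(channel, settings, display, fetchImpl = fetch) {
  channel.on("ChannelMessage", async (message, memberId) => {
    const { text, lang } = JSON.parse(message.text);
    const target = toTranslateCode(settings.lang);
    try {
      display(memberId, await translateText(text, lang, target, settings.apiKey, fetchImpl));
    } catch (err) {
      console.error("Translation failed:", err);
      display(memberId, text); // fall back to the untranslated transcript
    }
  });
}
```

Passing `format: "text"` stops the API from HTML-escaping characters such as apostrophes in the result, and skipping the request when the languages match saves a round trip. If you would rather not send the speaker's language, you can omit `source` and let the API detect it.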

Note: For testing, you can use two or more browser tabs to simulate multiple users on the call.

Conclusion

You did it!

You have successfully built a multilingual transcription service inside a web video call application. If you weren't coding along or want to see the finished product all in one place, I have uploaded all the code to GitHub:

You can check out the demo of the code in action:

Thanks for taking the time to read my tutorial. If you have questions, please let me know with a comment. If you see room for improvement, feel free to fork the repo and make a pull request!

Other Resources

To learn more about the Agora Web SDK and other use cases, see the developer guide here.

You can also join our Slack channel: