Agora provides powerful, real-time communication SDKs that make it simple to add high-quality audio, video, and interactive broadcasting to your applications.

We’re excited to launch a new Video SDK for React in beta. It is built on top of our robust Web SDK and exports easy-to-use hooks and components that make React a first-class citizen.

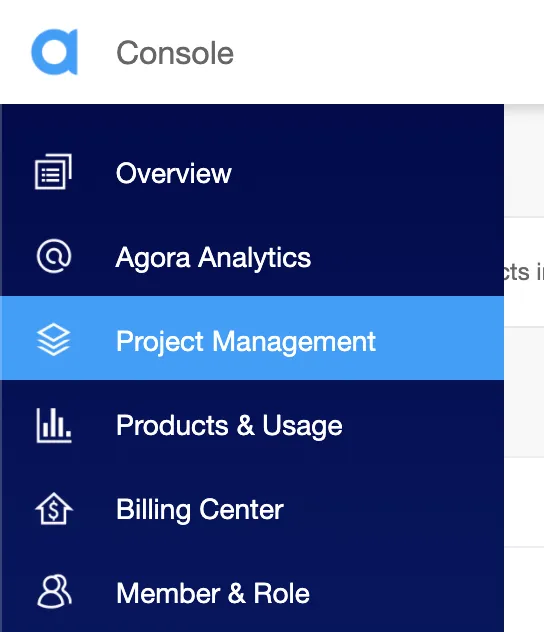

Sign up for an account and log in to the Agora Console.

Navigate to the Project List tab under the Project Management tab, and create a project by clicking the blue Create button. (When prompted to use App ID + Certificate, select App ID only.) Retrieve the App ID, which will be used to authorize your requests while you’re developing the application.

Note: This guide does not implement token authentication, which is recommended for all RTE apps running in production environments. For more information about token-based authentication in the Agora platform, see this guide: https://docs.agora.io/en/Video/token?platform=All%20Platforms

The source for this project is available on GitHub, and you can also try out a live demo. To follow along, scaffold a React project (we’ll use Vite):

    npm create vite@latest agora-videocall -- --template react-ts
    cd agora-videocall
    npm i agora-rtc-react agora-rtc-sdk-ng
    npm run dev

We’ll start in the App.tsx file, where we will import the dependencies we’ll use in our application:

We initialize a client object and pass it to the useRTCClient hook. Let’s set up our application state:

- channelName: Represents the name of the channel where users can join to chat with one another. Let’s call our channel “test”.
- AppID: Holds the Agora App ID that we obtained before from the Agora Console. Replace the empty string with your App ID.
- token: If you’re using tokens, you can provide one here. But for this demo we’ll just set it as null.
- inCall: A Boolean state variable to track whether the user is currently in a video call or not.

Next, we display the App component. In the return block, we’ll render an h1 element to display our heading. Now, based on the inCall state variable, we’ll display either a Form component to get details (App ID, channel name, and token) from the user or display the video call:

For the video call interface, we wrap our video call component in the AgoraRTCProvider component, to which we pass in the client we created previously. We’ll create a <Videos> component next, to hold the videos for our video call. We’ll display an End Call button that ends the call by setting the inCall state to false:
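As a rough sketch of the state described above, the snippet below models the four state variables as one plain object and isolates the two decisions the App component makes: which view to show, and what the End Call button does. It deliberately leaves out React and the SDK. The names `CallState`, `ActiveView`, `activeView`, and `endCall` are hypothetical and exist only for illustration; in the component itself each field would more likely live in its own useState call.

```typescript
// Hypothetical shape for the four pieces of state described in the text.
interface CallState {
  channelName: string;
  AppID: string;
  token: string | null;
  inCall: boolean;
}

// Defaults from the walkthrough: channel "test", empty App ID, no token, not in a call.
const initialState: CallState = {
  channelName: "test",
  AppID: "",
  token: null,
  inCall: false,
};

type ActiveView = "form" | "videos";

// The form is shown until the user joins; the video call view is shown afterwards.
function activeView(state: CallState): ActiveView {
  return state.inCall ? "videos" : "form";
}

// End Call only flips inCall back to false; the other fields are kept as they were.
function endCall(state: CallState): CallState {
  return { ...state, inCall: false };
}
```

Keeping the App ID and channel name untouched when the call ends means the form can be shown again with the previous values already filled in.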

We can use the useRemoteUsers hook to access the array containing other (remote) users in the call. This array contains an object for each remote user on the call. It’s a piece of React state that gets updated each time someone joins or leaves the channel:
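The SDK maintains this array for you, so nothing below is needed in the app. It is only a sketch of how a list of remote users can be kept as React-style state, where every join or leave produces a new array instead of mutating the old one. `RemoteUserInfo`, `userJoined`, and `userLeft` are hypothetical stand-ins, not SDK exports, and the real user objects carry more than a uid.

```typescript
// Stand-in for a remote user; only the identifier matters for this sketch.
interface RemoteUserInfo {
  uid: number | string;
}

// Returns a new array with the user appended, or the same array if the uid is already present.
function userJoined(
  users: readonly RemoteUserInfo[],
  user: RemoteUserInfo
): readonly RemoteUserInfo[] {
  const alreadyPresent = users.some((existing) => existing.uid === user.uid);
  return alreadyPresent ? users : users.concat([user]);
}

// Returns a new array without the user that left; the input array is not modified.
function userLeft(
  users: readonly RemoteUserInfo[],
  uid: number | string
): readonly RemoteUserInfo[] {
  return users.filter((existing) => existing.uid !== uid);
}
```

Because each change yields a new array reference, a component reading the list re-renders when someone joins or leaves.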

We’ll use the usePublish hook to publish the local media tracks (microphone and camera tracks). To start the call we need to join the channel. We can do that by calling the useJoin hook, passing it the AppID, channelName, and token.
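One way to prepare the values for the join call is sketched below. It assumes the join options are an object with `appid`, `channel`, and `token` fields, so check the API reference for the exact shape in your SDK version. `JoinOptionsSketch` and `buildJoinOptions` are hypothetical names, and the empty-App-ID check is an addition for illustration, not something the SDK requires you to write.

```typescript
// Assumed shape of the options object handed to the join hook.
interface JoinOptionsSketch {
  appid: string;
  channel: string;
  token: string | null;
}

// Maps the form state onto join options, treating an empty token string as "no token".
function buildJoinOptions(
  AppID: string,
  channelName: string,
  token: string | null
): JoinOptionsSketch {
  if (AppID.trim() === "") {
    // Fail early with a readable message instead of a failed join later on.
    throw new Error("An Agora App ID is required to join a channel");
  }
  return { appid: AppID, channel: channelName, token: token ? token : null };
}
```

Normalizing an empty string to null matters because a text input yields "" when left blank, while a project configured for App ID only expects no token at all.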

We can access the remote users’ audio tracks with the useRemoteAudioTracks hook by passing it the remoteUsers array. To listen to the remote users’ tracks, we can map over the audioTracks array and call the play method:
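The playback step can be sketched in isolation as follows. `PlayableTrack` is a hypothetical stand-in that declares only the play method mentioned above; in the app, the array would come from the useRemoteAudioTracks hook. The sketch uses forEach rather than map because the return values are not needed.

```typescript
// Stand-in for a remote audio track: only the method this sketch relies on.
interface PlayableTrack {
  play(): void;
}

// Starts playback for every remote audio track in the array.
function playAll(audioTracks: readonly PlayableTrack[]): void {
  audioTracks.forEach((track) => track.play());
}
```

Without this step the remote users' video would still render, but their audio would stay silent.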

We’ll check if either the microphone or the camera is still loading and render a simple message:

Once the tracks are ready, we’ll render a grid with videos of all the users in the channel. We can render the user’s own (local) video track using the LocalVideoTrack component from the SDK, passing it the localCameraTrack as the track prop:

We can display the remote users’ video tracks using the RemoteUser component. We’ll iterate through the remoteUsers array, passing each user as a prop to it:

For the sake of completeness, here’s what the Form component looks like:

I’ve also put together a utility to render a simple grid of videos. Here’s what that function looks like:
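The original listing is not reproduced here, so the following is a minimal sketch of what such a grid helper could look like, not the author's exact code. It picks a number of equal-width CSS grid columns from the number of remote users. The function names and the thresholds (more than 8, more than 3, more than 0) are assumptions chosen for illustration.

```typescript
// Picks a column count from the number of remote users (thresholds are assumed).
function columnCount(remoteCount: number): number {
  if (remoteCount > 8) return 4;
  if (remoteCount > 3) return 3;
  if (remoteCount > 0) return 2;
  return 1; // only the local video is on screen
}

// Builds a style object whose gridTemplateColumns has one equal-width track per column.
function gridColumnsFor(remoteUsers: readonly unknown[]): { gridTemplateColumns: string } {
  const columns = columnCount(remoteUsers.length);
  const tracks: string[] = [];
  for (let i = 0; i < columns; i++) {
    // minmax(0, 1fr) lets each column shrink below its content's intrinsic width.
    tracks.push("minmax(0, 1fr)");
  }
  return { gridTemplateColumns: tracks.join(" ") };
}
```

The returned object can be spread into the style prop of the element that wraps the local and remote videos, alongside `display: "grid"`.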

That’s all it takes to put together a high-quality video conferencing app with the Agora React SDK. We’ve barely scratched the surface in terms of what’s possible. You can learn more by visiting the docs and our API reference.

We’re looking for feedback on how we can improve the SDK in this beta period. Please contribute by opening issues (and submitting PRs) on our GitHub repo.