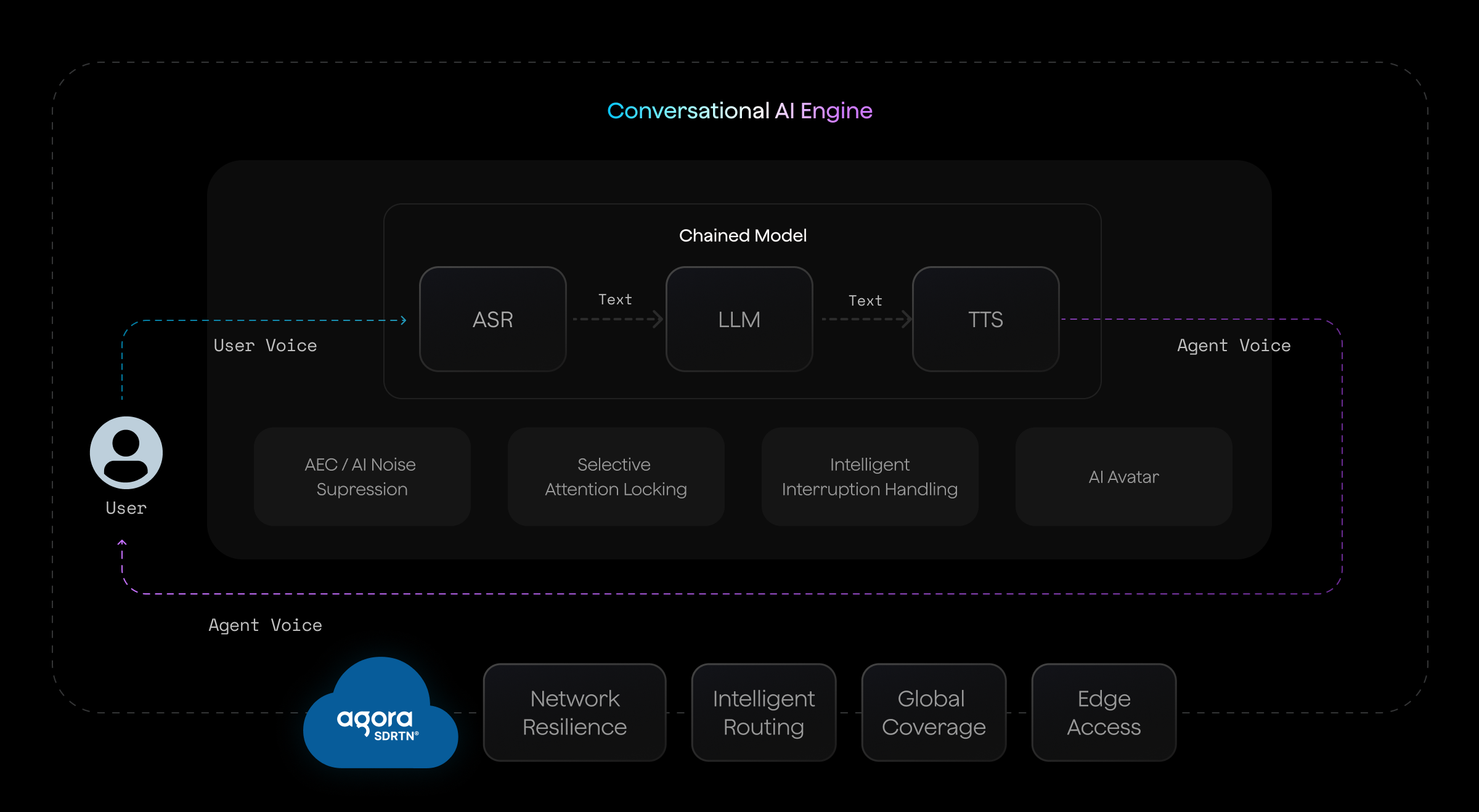

Agora enables more natural voice conversations with AI through low-latency responses and real-time interruption handling. Agora’s built-in background noise suppression, echo cancellation, and selective attention locking let the AI hear the user clearly in any environment, and Agora’s global real-time network provides connectivity and performance wherever users are located.

Agora’s Conversational AI Engine supports a wide range of large language models (LLMs).

Review our documentation on connecting LLMs here: https://docs.agora.io/en/conversational-ai/models/llm/overview

Agora’s Conversational AI Engine currently supports multiple automatic speech recognition (ASR) providers.

Review our documentation on connecting ASR models here: https://docs.agora.io/en/conversational-ai/models/asr/overview

Agora’s Conversational AI Engine currently supports multiple text-to-speech (TTS) providers.

Review our documentation on connecting TTS models here: https://docs.agora.io/en/conversational-ai/models/tts/overview

Agora’s Conversational AI Engine currently supports multiple AI avatar providers.

Review our documentation on connecting avatar providers here: https://docs.agora.io/en/conversational-ai/models/avatar/overview

To implement a voice AI agent, you need to connect an LLM and a text-to-speech service to Agora’s Conversational AI Engine. This enables full customization of the experience, with the LLM and voice of your choice.

The chained, or cascade, model is a three-stage processing flow: automatic speech recognition (ASR) converts the user’s speech to text, the LLM processes that text, and text-to-speech (TTS) converts the LLM’s response into the AI agent’s spoken reply.
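The cascade flow can be pictured as three functions composed in order. The sketch below is a minimal illustration only: the stage types (`Asr`, `Llm`, `Tts`), the `CascadePipeline` class, and the `fake_*` stand-ins are hypothetical names invented for this example, not Agora’s API. A real deployment streams audio and handles interruptions instead of processing one complete utterance at a time.

```python
from dataclasses import dataclass
from typing import Callable

# Hypothetical stage signatures for illustration; not Agora's API.
Asr = Callable[[bytes], str]   # user audio in -> transcript out
Llm = Callable[[str], str]     # transcript in -> reply text out
Tts = Callable[[str], bytes]   # reply text in -> agent audio out


@dataclass
class CascadePipeline:
    """Chains ASR -> LLM -> TTS for one complete utterance."""
    asr: Asr
    llm: Llm
    tts: Tts

    def respond(self, user_audio: bytes) -> bytes:
        transcript = self.asr(user_audio)  # 1. speech -> text
        reply = self.llm(transcript)       # 2. text -> response text
        return self.tts(reply)             # 3. response text -> speech


# Toy stand-ins so the sketch runs without any external service.
# Here "audio" is just UTF-8 bytes of the spoken text.
def fake_asr(audio: bytes) -> str:
    return audio.decode("utf-8")


def fake_llm(text: str) -> str:
    return f"You said: {text}"


def fake_tts(text: str) -> bytes:
    return text.encode("utf-8")


pipeline = CascadePipeline(asr=fake_asr, llm=fake_llm, tts=fake_tts)
print(pipeline.respond(b"hello"))  # b'You said: hello'
```

Because each stage is an independent callable, switching to a different LLM or voice only means passing a different function for that stage, while the other two stages stay unchanged.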

Agora’s Conversational AI Engine does not create or train LLMs; it requires an existing AI model or LLM. The Engine enables customized voice interaction with that LLM.